Table of contents

- What is the Difference between an Image, Container and Engine?

- What is the Difference between the Docker command COPY vs ADD?

- What is the Difference between the Docker command CMD vs RUN?

- How Will you reduce the size of the Docker image?

- Why and when to use Docker?

- Explain the Docker components and how they interact with each other.

- Explain the terminology: Docker Compose, Docker File, Docker Image, Docker Container?

- Docker vs. Hypervisor?

- What are the advantages and disadvantages of using docker?

- What is a Docker registry?

- What is an entry point?

- How to implement CI/CD in Docker?

- Will data on the container be lost when the docker container exits?

- What is a Docker swarm?

- What are the common Docker practices to reduce the size of Docker Images?

- How to Enable IPV6 Network in Docker Containers?

- How to Deploy a Registry Server?

- DOCKER SWARM

- Create a swarm

- Add nodes to the swarm

- Deploy a service to the swarm

- Inspect a service on the swarm

- Scale the service in the swarm

- Delete the service running on the swarm

- Apply rolling updates to a service

- What is Docker Volume?

- Volumes

What is the Difference between an Image, Container and Engine?

Image

It is a read-only template that contains only the files and dependencies needed to run an application. An image is built from a Dockerfile, which holds the set of instructions for building it. An image can be considered a snapshot of a particular version of the application, and one or more containers can be created from it.

Container

It is an instance of the image which can be run in an isolated environment.

Multiple containers can be created from the same image, each with its own isolated file system and network stack. Containers are lightweight and portable, so the same image runs the same way on any host with a compatible container runtime.

Engine

A container engine such as Docker Engine builds images and runs containers on a host. It provides a set of APIs for building, starting, stopping and managing containers. Orchestrators such as Kubernetes or Docker Swarm sit on top of an engine or runtime to monitor container health and scale up and down across many hosts according to the requirements.

Conclusion

The image is a blueprint of the application, the container is the running instance of the application, and the container engine is the software that manages the application.

What is the Difference between the Docker command COPY vs ADD?

The ADD instruction can use a URL as a source, whereas COPY can only copy from the local build context (or from another build stage with --from). ADD also automatically extracts a local tar archive (including gzip, bzip2 and xz compressed ones) into the destination; COPY does not, and ADD does not extract archives fetched from a URL. Both instructions accept the --chown flag to set file ownership.

Although ADD has additional features, they make its behavior less transparent, so we use COPY for plain file copies and ADD only when its extra features are needed.

FROM python:3.8-slim

WORKDIR /app

COPY . /app

CMD ["python", "app.py"]

FROM node:14-alpine

WORKDIR /app

ADD https://github.com/example/example/archive/master.tar.gz /app/

RUN tar -xzf /app/master.tar.gz --strip-components=1 && \

rm /app/master.tar.gz

CMD ["npm", "start"]

What is the Difference between the Docker command CMD vs RUN?

The RUN instruction executes during the image build, whereas CMD sets the default command that runs when a container starts from the built image. Each RUN creates a new layer in the image; it is used for installing dependencies and performing other build-time tasks. A Dockerfile can contain several CMD instructions, but only the last one takes effect.

FROM python:3.8-slim

RUN apt-get update && \

apt-get install -y git && \

pip install requests

FROM python:3.8-slim

WORKDIR /app

COPY . .

CMD ["python", "app.py"]

How Will you reduce the size of the Docker image?

1. Using Minimal base images

For example, use Alpine images, which are relatively small, or distroless images, which are stripped-down images that contain only the application and its runtime dependencies.

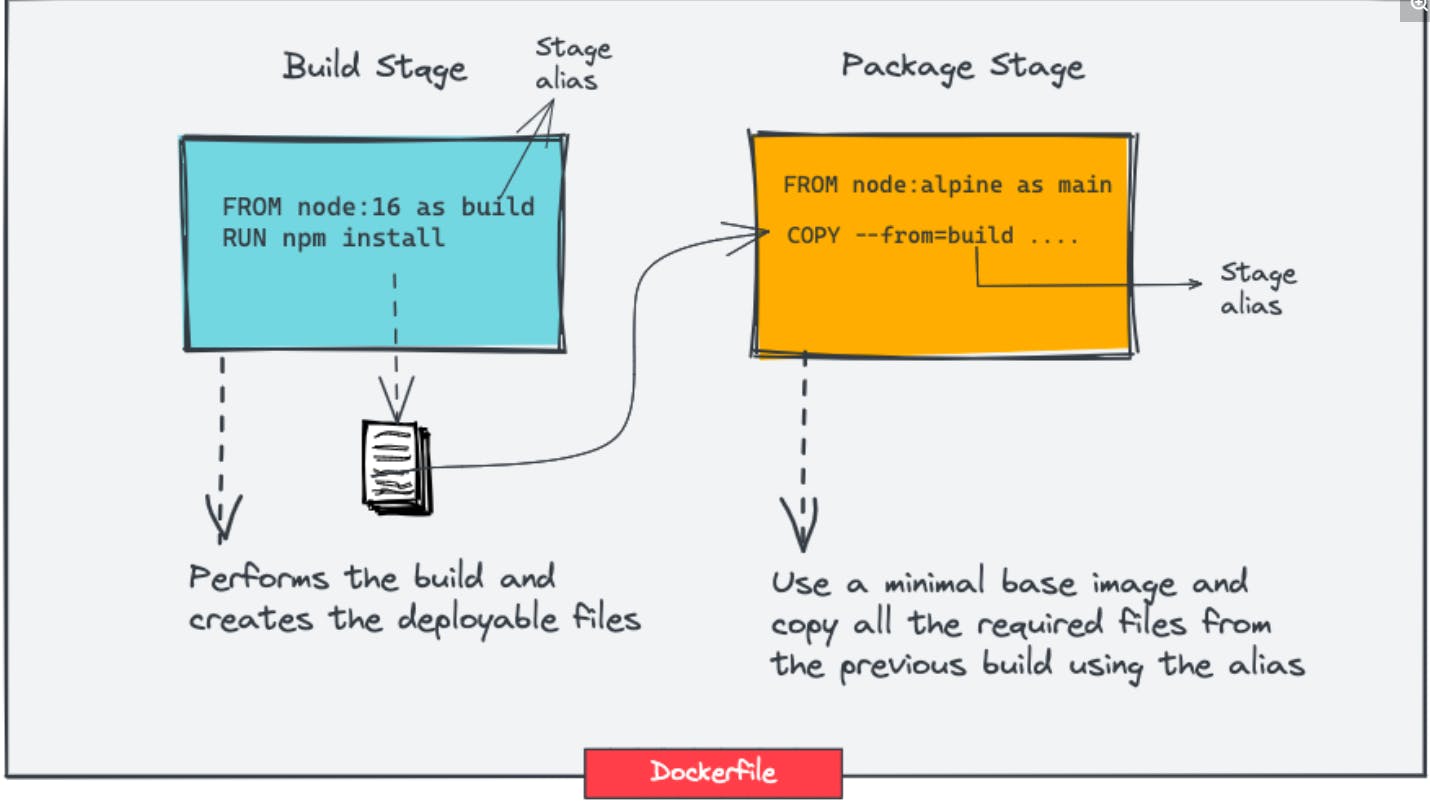

2. Use Docker MultiStage Builds

Here we use multiple stages in a single Dockerfile to reduce the size of the image. A later stage copies only the files and dependencies that were already built in an earlier stage. Each stage can have a different base image. For example, you can have different stages for build, test, static analysis, and package with different base images.

FROM node:16 as build

WORKDIR /app

COPY package.json index.js env ./

RUN npm install

FROM node:16-alpine AS main

WORKDIR /app

COPY --from=build /app ./

EXPOSE 8080

CMD ["node", "index.js"]

Minimizing the number of layers

We can minimize the number of layers by combining related steps, for example by installing all packages and dependencies in a single RUN instruction.

Understanding Caching

Docker caches each layer so that steps which are already built need not be built again. How much of the cache is reused on the next build depends on how the Dockerfile is written: once the inputs of one instruction change, that layer and every layer after it are rebuilt. For example:

FROM ubuntu:latest

ENV DEBIAN_FRONTEND=noninteractive

RUN apt-get update -y

RUN apt-get upgrade -y

RUN apt-get install vim -y

RUN apt-get install net-tools -y

RUN apt-get install dnsutils -y

COPY . .

and

FROM ubuntu:latest

COPY . .

ENV DEBIAN_FRONTEND=noninteractive

RUN apt-get update -y

RUN apt-get upgrade -y

RUN apt-get install vim -y

RUN apt-get install net-tools -y

RUN apt-get install dnsutils -y

The first Dockerfile makes better use of the cache: because COPY comes last, a change to the application files invalidates only the final layer.
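The effect of instruction order on cache reuse can be illustrated with a small Python model. This is a deliberately simplified sketch, not Docker's actual cache implementation: it assumes a layer's cache key depends only on its parent layer, the instruction text, and (for COPY or ADD) a digest of the build context. The function names and the "v1"/"v2" digests are hypothetical.

```python
import hashlib


def layer_keys(instructions, context_digest):
    """Return one cache key per instruction.

    Simplified model: a key depends on the parent key and the instruction
    text; COPY/ADD keys also depend on the build-context content.
    """
    keys, parent = [], ""
    for ins in instructions:
        material = parent + "\n" + ins
        if ins.split()[0] in ("COPY", "ADD"):
            material += "\n" + context_digest
        parent = hashlib.sha256(material.encode()).hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(instructions, old_digest, new_digest):
    """Count layers whose key changes when the build context changes."""
    old = layer_keys(instructions, old_digest)
    new = layer_keys(instructions, new_digest)
    return sum(1 for a, b in zip(old, new) if a != b)


installs = [
    "ENV DEBIAN_FRONTEND=noninteractive",
    "RUN apt-get update -y",
    "RUN apt-get upgrade -y",
    "RUN apt-get install vim -y",
    "RUN apt-get install net-tools -y",
    "RUN apt-get install dnsutils -y",
]
copy_last = ["FROM ubuntu:latest"] + installs + ["COPY . ."]
copy_first = ["FROM ubuntu:latest", "COPY . ."] + installs

# Simulate editing the application source between two builds.
print("COPY last :", rebuilt_layers(copy_last, "v1", "v2"), "layer(s) rebuilt")
print("COPY first:", rebuilt_layers(copy_first, "v1", "v2"), "layer(s) rebuilt")
```

In this model, a source change rebuilds one layer when COPY is last and seven layers when COPY comes right after FROM, which is why slow, rarely changing steps belong near the top of a Dockerfile.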

Use a .dockerignore file

Keep Application data elsewhere

Storing application data in a Docker volume, separate from the image, is a good practice that keeps large data files out of the image.

Why and when to use Docker?

Docker is used for the containerization of applications so that applications can be developed, shipped and run more efficiently and quickly. It allows consistency, portability, efficiency, isolation, scalability, and security.

It can be used for development and testing, CI/CD, microservice architecture, and cloud-native applications.

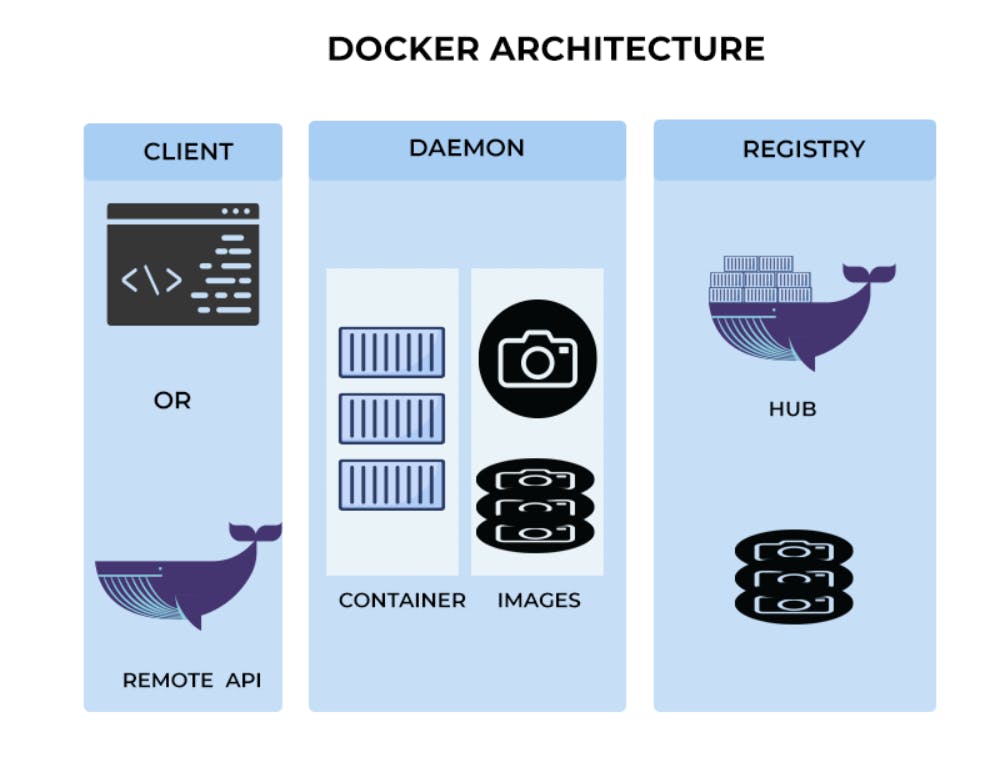

Explain the Docker components and how they interact with each other.

Docker Daemon

It manages Docker images, containers, networks and volumes. It listens for Docker API requests and executes them.

Docker Client

It is a command line tool to interact with the docker daemon. It sends the commands to the docker daemon using the Docker API and displays the results.

Docker Images

It is a read-only template that contains all the dependencies required to run an application. It includes the application code, runtime, system tools, libraries, and settings. Docker images are created using a Dockerfile, which specifies the instructions for building the image.

Docker Registry

It is a central repository that stores Docker images. Docker Hub is the default registry used by Docker, but users can create their own private registries.

Docker Containers

It is a runtime instance of a Docker image. Containers are isolated environments that run on top of the host machine's operating system. They have their own file system, network interfaces, and process space.

Explain the terminology: Docker Compose, Docker File, Docker Image, Docker Container?

Docker is a containerization technology that allows developers to package an application along with its dependencies into a container that can run consistently across different environments. The following are the main terminologies used in Docker:

Docker Compose: Docker Compose is a tool that allows developers to define and run multi-container Docker applications. It uses a YAML file to define the services, networks, and volumes required for the application and can be used to start, stop, and scale the application.

Dockerfile: A Dockerfile is a text file that contains a set of instructions that are used to build a Docker image. The Dockerfile specifies the base image to use, the dependencies to install, and any configuration required for the application to run. The Dockerfile is used by the Docker engine to build an image that can be used to create a container.

Docker Image: A Docker image is a read-only template that contains everything required to run an application. It includes the application code, runtime, system tools, libraries, and settings. Docker images are built from a Dockerfile and can be stored in a Docker registry.

Docker Container: A Docker container is a runtime instance of a Docker image. It is an isolated environment that runs on top of the host machine's operating system. Docker containers are created from Docker images and can be started, stopped, and deleted.

Docker vs. Hypervisor?

Docker and hypervisor are both technologies used for virtualization, but they serve different purposes and have different architectures. Here's a comparison between Docker and a traditional hypervisor:

1. Purpose:

Docker: Docker is a containerization platform that allows you to package applications and their dependencies into a standardized unit called a container. Containers share the host OS kernel and run in isolation from each other. They are lightweight and designed for process-level isolation.

Hypervisor: A hypervisor, on the other hand, is a virtualization platform that allows you to run multiple operating systems (as virtual machines) on a single physical machine. Each virtual machine has its own complete OS and runs on virtual hardware that the hypervisor provides.

2. Resource Utilization:

Docker: Docker containers share the host OS kernel, which means they are more lightweight and consume fewer resources compared to virtual machines.

Hypervisor: Virtual machines have their own complete OS, which consumes more resources (memory, CPU, storage) compared to containers.

3. Portability and Isolation:

Docker: Containers are highly portable and can run on any system that supports Docker, as long as it has the Docker runtime installed. They provide process-level isolation, which means they share the host OS but have their own isolated file system, networking, and process space.

Hypervisor: Virtual machines are less portable because they require a hypervisor to run, and the virtual machine image is specific to the hypervisor being used. They provide full isolation, as each virtual machine has its own complete OS.

4. Performance:

Docker: Due to the lightweight nature of containers, Docker typically offers better performance compared to virtual machines.

Hypervisor: Running a complete OS for each virtual machine can result in higher resource overhead and potentially slower performance compared to containers.

5. Use Cases:

Docker: Docker is well-suited for applications that can be easily packaged with their dependencies. It is commonly used for microservices, cloud-native applications, and environments where fast deployment and scaling are important.

Hypervisor: Hypervisors are used in scenarios where you need to run multiple different operating systems on the same physical machine. This is common in scenarios where you need to isolate workloads, run legacy applications, or have strict security requirements.

6. Ecosystem:

Docker: Docker has a rich ecosystem of tools and services for building, managing, and deploying containers. This includes Docker Compose, Docker Swarm, and integration with container orchestration platforms like Kubernetes.

Hypervisor: Hypervisors have their own ecosystems of tools for managing virtual machines, but they tend to be more focused on virtualization management.

In summary, Docker and hypervisor serve different virtualization needs. Docker is focused on containerization for lightweight, portable, and scalable application deployment, while hypervisors are used for running multiple complete operating systems on a single physical machine. In some scenarios, they may even be used together, with Docker containers running inside virtual machines for additional isolation.

What are the advantages and disadvantages of using docker?

Using Docker comes with several advantages and disadvantages. Here's a breakdown of both:

Advantages of Docker:

Isolation and Consistency:

- Docker containers provide process-level isolation, ensuring that applications and their dependencies run consistently across different environments.

Portability:

- Docker containers can run on any system that supports Docker, providing a consistent environment for applications regardless of the underlying infrastructure.

Resource Efficiency:

- Containers share the host OS kernel, which makes them more lightweight compared to virtual machines. This leads to faster startup times and better resource utilization.

Rapid Deployment:

- Containers can be started and stopped quickly, allowing for rapid deployment and scaling of applications.

Microservices and Modular Architecture:

- Docker facilitates the development and deployment of microservices, allowing for easy scaling and management of individual components of an application.

Easy Integration with CI/CD:

- Docker containers can be easily integrated into continuous integration/continuous deployment (CI/CD) pipelines, automating the testing and deployment process.

Version Control and Rollbacks:

- Docker images can be versioned, making it easy to roll back to a previous version if there are issues with a new release.

Isolation of Dependencies:

- Docker containers encapsulate an application and its dependencies, reducing potential conflicts between different applications running on the same host.

Ecosystem and Community:

- Docker has a large and active community, which means there is a wealth of resources, documentation, and third-party tools available.

Disadvantages of Docker:

Limited OS Support:

- Docker primarily supports Linux, although it can be used on Windows and macOS through a virtualization layer. However, this additional layer can introduce some performance overhead.

Security Concerns:

- While Docker provides isolation, misconfiguration or vulnerabilities in container images can still pose security risks. Proper security practices are essential.

Learning Curve:

- Docker introduces a new set of concepts (containers, images, volumes, etc.) that may require some learning for those new to containerization.

Persistence and Data Management:

- Containers are typically designed to be stateless, which can complicate data management. Special considerations and tools (like Docker Volumes) are needed for persistent storage.

Complex Networking:

- While Docker provides powerful networking capabilities, configuring complex network setups may require a deeper understanding of networking concepts.

Limited GUI Applications:

- Docker is primarily designed for running command-line applications. Running GUI-based applications within Docker containers can be more complex.

Resource Contention:

- If not properly managed, Docker containers on a host machine can compete for resources, potentially leading to performance issues.

Size of Images:

- Docker images can be relatively large, especially if they include a full operating system. This can lead to longer image upload and download times.

What is a Docker namespace?

In Docker, a namespace is a feature of the Linux kernel that isolates what a process can see. Namespaces create isolated views of system resources such as process IDs, network interfaces, and filesystems. Docker leverages these namespaces to provide isolation for its containers; resource limits are handled separately by cgroups.

Here are some of the important namespaces used by Docker:

PID Namespace: This namespace isolates processes within a container. Each container has its separate process ID (PID) namespace, which means that processes running inside the container are isolated from processes running on the host and other containers.

Network Namespace: Docker uses network namespaces to create isolated network stacks for containers. Each container has its network namespace, allowing it to have its network interfaces, IP addresses, routing tables, and firewall rules.

Mount Namespace: Mount namespaces isolate the filesystems seen by processes inside a container. This means that containers have their isolated filesystem views, even though they may share the host's kernel.

UTS Namespace: UTS namespaces isolate the hostname and domain name for processes within a container. This allows containers to have their own hostname and domain name separate from the host system.

IPC Namespace: IPC (Inter-Process Communication) namespaces isolate various inter-process communication mechanisms like message queues and semaphores. This isolation prevents processes in one container from interfering with the IPC mechanisms of another container.

User Namespace: User namespaces provide user and group ID mapping between the container and the host. This allows a process inside a container to have its own user and group IDs that may be different from the host's, enhancing security.

These namespaces collectively contribute to the isolation and separation of Docker containers from each other and the host system. They ensure that processes running inside a container cannot directly interact with or affect processes in other containers or on the host.

Namespace isolation is one of the key features that make Docker containers lightweight, efficient, and secure, as it allows multiple containers to share the same kernel while maintaining isolation at the process, network, filesystem, and other resource levels. Let's illustrate the concept of Docker namespaces with a simple example:

Suppose you have two Docker containers, Container_A and Container_B, running on the same host system.

PID Namespace:

Container_A has its own PID namespace. Inside Container_A, processes are assigned PIDs starting from 1, and these PIDs are only meaningful within Container_A. Similarly, Container_B has its own PID namespace, and processes inside it have PIDs starting from 1, independent of those in Container_A.

Network Namespace:

Container_A and Container_B each have their own isolated network namespace. They can have their own network interfaces, IP addresses, and firewall rules. For instance, Container_A might have an IP address of 172.17.0.2 and Container_B might have 172.17.0.3.

Mount Namespace:

Inside Container_A, the root filesystem is isolated. What appears as / inside Container_A is different from what appears as / on the host system or in Container_B. This means that Container_A has its own view of the filesystem.

UTS Namespace:

Container_A can have its own hostname, say containerA. So, if you run the hostname command inside Container_A, it will return containerA, while on the host system, it might return a different hostname.

IPC Namespace:

Container_A and Container_B each have their own isolated inter-process communication mechanisms. They cannot directly interact via shared memory segments, semaphores, or message queues.

User Namespace:

User namespaces allow the mapping of user and group IDs inside the container to different IDs outside the container.

For example, a process running as UID 1000 inside Container_A might be mapped to a different UID outside the container.

These namespaces ensure that processes inside Container_A are isolated from processes in Container_B and on the host system. Each container operates in its separate namespace, which provides a level of isolation and security.

Keep in mind that this is a simplified example. Docker also employs other techniques and features to enhance security and isolation, such as cgroups (control groups) for resource control and capabilities for fine-grained permissions.
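On a Linux host, the namespaces a process belongs to are exposed as symbolic links under /proc/<pid>/ns. The following Python sketch lists them for the current process; the function name current_namespaces is hypothetical, and on systems without that directory it simply returns an empty result.

```python
import os


def current_namespaces(pid="self"):
    """Map namespace type (e.g. 'pid', 'net') to its kernel identifier.

    Returns an empty dict when /proc/<pid>/ns is not available.
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        return result
    for name in sorted(names):
        try:
            # Each entry is a symlink whose target names the namespace instance.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


for name, ident in current_namespaces().items():
    print(f"{name:10s} {ident}")
```

Running the script once on the host and once inside a container should show different identifiers for every namespace the container does not share with the host.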

What is a Docker registry?

Docker Registry:

- A Docker registry is a storage and distribution system for Docker images. It's like a repository where you can store and manage Docker images. The most well-known public Docker registry is Docker Hub, but you can also set up private registries for your organization's use. These registries allow you to share and distribute container images across different systems.

What is an entry point?

Entry Point:

In the context of a Docker container, the entry point is the command that is executed when the container starts. It defines the default behavior of the container. You can specify an entry point in a Dockerfile, or override it when running a container.
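How the entry point combines with default arguments can be sketched in Python. This models only the exec (JSON array) form of ENTRYPOINT and CMD, where CMD supplies default arguments that are replaced by anything passed after the image name on docker run. The function name and the example image contents are hypothetical.

```python
def effective_command(entrypoint, cmd, run_args=None):
    """Compose the argv a container starts with (exec form only).

    entrypoint: list from ENTRYPOINT (may be empty)
    cmd:        list from CMD, used as default arguments
    run_args:   arguments given after the image name on `docker run`;
                when present they replace CMD
    """
    args = list(run_args) if run_args else list(cmd)
    return list(entrypoint) + args


# Hypothetical image: ENTRYPOINT ["python", "app.py"] and CMD ["--port", "8080"]
entrypoint = ["python", "app.py"]
cmd = ["--port", "8080"]

print(effective_command(entrypoint, cmd))                      # defaults from CMD
print(effective_command(entrypoint, cmd, ["--port", "9090"]))  # CMD replaced
```

The entry point itself stays fixed in both calls; replacing it at run time is done with the --entrypoint flag of docker run, which this sketch does not model.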

How to implement CI/CD in Docker?

Implementing CI/CD in Docker:

Implementing Continuous Integration/Continuous Deployment (CI/CD) with Docker involves using tools and practices to automate the building, testing, and deploying of Docker containers. Here are some steps to implement CI/CD with Docker:

Version Control: Store your code in a version control system like Git.

Automated Builds: Set up a CI/CD system (e.g., Jenkins, GitLab CI/CD, GitHub Actions) to automatically build Docker images whenever code changes are pushed to the repository.

Automated Testing: Run automated tests on the Docker image to ensure it meets quality standards.

Image Tagging: Use a tagging strategy (e.g., version numbers, commit hashes) to label your Docker images for easy identification.

Artifact Registry: Store the built Docker images in a Docker registry (e.g., Docker Hub, private registry).

Deployment: Automate the deployment of Docker containers using container orchestration platforms like Kubernetes, Docker Swarm, or tools like Docker Compose.
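As an illustration of the image tagging step, a pipeline could derive its tags from the release version and the commit hash. The Python sketch below is one possible convention, not a Docker requirement; the repository name, the "sha-" prefix and the function name are all hypothetical.

```python
def image_tags(repository, version, commit_sha, short_len=7):
    """Return the image references a pipeline run could build and push."""
    if not version or not commit_sha:
        raise ValueError("version and commit_sha are required")
    return [
        f"{repository}:{version}",                     # human-readable release tag
        f"{repository}:sha-{commit_sha[:short_len]}",  # traceable to one commit
    ]


tags = image_tags(
    "registry.example.com/team/app",
    "1.4.2",
    "9fceb02d0ae598e95dc970b74767f19372d61af8",
)
for tag in tags:
    print(tag)
```

Pushing both tags lets deployments refer to a readable version while still allowing any running image to be traced back to the exact commit that produced it.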

Will data on the container be lost when the docker container exits?

Data Persistence in Docker:

- Not when the container merely exits. Changes made to a container's filesystem are kept in its writable layer and survive a stop and restart, but they are lost when the container is removed (for example with docker rm, or when it was started with --rm). To keep data beyond the life of a container, use Docker volumes, which Docker manages, or bind mounts, which link a directory on the host system to a directory inside the container.

What is a Docker swarm?

Docker Swarm:

- Docker Swarm is a container orchestration platform provided by Docker. It allows you to create and manage a cluster of Docker hosts (nodes) to deploy and manage containerized applications. Swarm provides features for load balancing, service discovery, and scaling. It's a simpler alternative to Kubernetes for orchestrating Docker containers.

What are the common Docker practices to reduce the size of Docker Images?

Reducing the size of Docker images is crucial for optimizing storage space, speeding up image downloads, and improving deployment times. Here are some common Docker practices to help reduce image size:

Use Minimal Base Images:

- Start with a minimal base image that contains only the essentials needed for your application. Alpine Linux and distroless images are popular choices for lightweight base images.

Multi-Stage Builds:

- Utilize multi-stage builds to separate the build environment from the runtime environment. This allows you to build dependencies in one image and then copy only the necessary artifacts to a smaller final image.

Avoid Unnecessary Dependencies:

- Remove any unnecessary packages, libraries, or files from the image. Only include what is required for the application to run.

Optimize Dockerfile Layers:

- Combine multiple RUN commands into a single command to reduce the number of layers created. This can be achieved using && to chain commands.

Clean Up After Installations:

- Remove temporary files, cache, and package manager metadata after installing dependencies to reduce the image size. Use apt-get clean or equivalent commands for package managers, and run the cleanup in the same RUN instruction as the installation, because files deleted in a later layer still take up space in the earlier one.

Use .dockerignore File:

- Similar to .gitignore, the .dockerignore file allows you to specify files and directories that should not be included in the Docker build context. This can help exclude unnecessary files from the image.

Minimize Installed Tools and Packages:

- Only install tools and packages that are essential for the application to run. Avoid including debugging tools or unnecessary utilities.

Use COPY Wisely:

- Be selective when using the COPY command in your Dockerfile. Only copy files that are needed for the application to run, and consider using .dockerignore to exclude unnecessary files.

Avoid Using the Latest Tags:

- Avoid using latest tags for base images. Specify a specific version or digest to ensure consistency and prevent unexpected changes in the base image.

Use Alpine Versions of Packages:

- If using Alpine Linux, choose Alpine-compatible versions of packages, as they tend to be more lightweight than their full-featured counterparts.

Compress Artifacts:

- If you're including large files or archives, compress them before adding them to the image. Decompress them during the build process.

Avoid Using Unnecessary Services:

- If possible, avoid running additional services (e.g., SSH, syslog) within the container, as they add unnecessary overhead.

By following these practices, you can create Docker images that are more efficient in terms of size, making them easier to distribute and deploy. Keep in mind that optimizing image size may require some experimentation and tuning based on the specific requirements of your application.

How to Enable IPV6 Network in Docker Containers?

Create an IPv6 network

The following steps show you how to create a Docker network that uses IPv6.

Edit the Docker daemon configuration file, located at /etc/docker/daemon.json. Configure the following parameters:

{

"experimental": true,

"ip6tables": true

}

ip6tables enables additional IPv6 packet filter rules, providing network isolation and port mapping. This parameter requires experimental to be set to true.

Save the configuration file.

Restart the Docker daemon for your changes to take effect.

$ sudo systemctl restart docker

Create a new IPv6 network.

Using docker network create:

$ docker network create --ipv6 --subnet 2001:0DB8::/112 ip6net

Using a Docker Compose file:

networks:

  ip6net:

    enable_ipv6: true

    ipam:

      config:

        - subnet: 2001:0DB8::/112

You can now run containers that attach to the ip6net network.

$ docker run --rm --network ip6net -p 80:80 traefik/whoami

This publishes port 80 on both IPv6 and IPv4. You can verify the IPv6 connection by running curl, connecting to port 80 on the IPv6 loopback address:

$ curl http://[::1]:80

Hostname: ea1cfde18196

IP: 127.0.0.1

IP: ::1

IP: 172.17.0.2

IP: fe80::42:acff:fe11:2

RemoteAddr: [fe80::42:acff:fe11:2]:54890

GET / HTTP/1.1

Host: [::1]

User-Agent: curl/8.1.2

Accept: */*

Use IPv6 for the default bridge network

The following steps show you how to use IPv6 on the default bridge network.

Edit the Docker daemon configuration file, located at /etc/docker/daemon.json. Configure the following parameters:

{

"ipv6": true,

"fixed-cidr-v6": "2001:db8:1::/64",

"experimental": true,

"ip6tables": true

}

ipv6 enables IPv6 networking on the default network.

fixed-cidr-v6 assigns a subnet to the default bridge network, enabling dynamic IPv6 address allocation.

ip6tables enables additional IPv6 packet filter rules, providing network isolation and port mapping. This parameter requires experimental to be set to true.

Save the configuration file.

Restart the Docker daemon for your changes to take effect.

$ sudo systemctl restart docker

You can now run containers on the default bridge network.

$ docker run --rm -p 80:80 traefik/whoami

This publishes port 80 on both IPv6 and IPv4. You can verify the IPv6 connection by making a request to port 80 on the IPv6 loopback address:

$ curl http://[::1]:80

Hostname: ea1cfde18196

IP: 127.0.0.1

IP: ::1

IP: 172.17.0.2

IP: fe80::42:acff:fe11:2

RemoteAddr: [fe80::42:acff:fe11:2]:54890

GET / HTTP/1.1

Host: [::1]

User-Agent: curl/8.1.2

Accept: */*
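Because daemon.json may already hold other settings, it can help to merge the IPv6 keys into the existing content rather than overwrite the file. The following Python sketch shows such a merge using the default-bridge values from this section. The function name with_ipv6 and the sample existing content are hypothetical, and the sketch only produces the JSON text: writing /etc/docker/daemon.json and restarting the daemon remain manual steps.

```python
import json

# Values taken from the default bridge example in this section.
IPV6_BRIDGE_SETTINGS = {
    "ipv6": True,
    "fixed-cidr-v6": "2001:db8:1::/64",
    "experimental": True,
    "ip6tables": True,
}


def with_ipv6(existing_json):
    """Return daemon.json text with the IPv6 keys merged into existing settings."""
    config = json.loads(existing_json) if existing_json.strip() else {}
    config.update(IPV6_BRIDGE_SETTINGS)
    return json.dumps(config, indent=2)


# Hypothetical existing file content; "log-driver" stands in for any prior setting.
print(with_ipv6('{"log-driver": "json-file"}'))
```

Parsing the file first also catches JSON syntax errors before the daemon is restarted with a broken configuration.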

Dynamic IPv6 subnet allocation

If you don't explicitly configure subnets for user-defined networks, using docker network create --subnet=<your-subnet>, those networks use the default address pools of the daemon as a fallback. The default address pools are all IPv4 pools. This also applies to networks created from a Docker Compose file, with enable_ipv6 set to true.

To enable dynamic subnet allocation for user-defined IPv6 networks, you must manually configure address pools of the daemon to include:

The default IPv4 address pools

One or more IPv6 pools of your own

The default address pool configuration is:

{

"default-address-pools": [

{ "base": "172.17.0.0/16", "size": 16 },

{ "base": "172.18.0.0/16", "size": 16 },

{ "base": "172.19.0.0/16", "size": 16 },

{ "base": "172.20.0.0/14", "size": 16 },

{ "base": "172.24.0.0/14", "size": 16 },

{ "base": "172.28.0.0/14", "size": 16 },

{ "base": "192.168.0.0/16", "size": 20 }

]

}

The following example shows a valid configuration with the default values and an IPv6 pool. The IPv6 pool in the example provides up to 256 IPv6 subnets of size /112, from an IPv6 pool of prefix length /104. Each /112-sized subnet supports 65 536 IPv6 addresses.

{

"default-address-pools": [

{ "base": "172.17.0.0/16", "size": 16 },

{ "base": "172.18.0.0/16", "size": 16 },

{ "base": "172.19.0.0/16", "size": 16 },

{ "base": "172.20.0.0/14", "size": 16 },

{ "base": "172.24.0.0/14", "size": 16 },

{ "base": "172.28.0.0/14", "size": 16 },

{ "base": "192.168.0.0/16", "size": 20 },

{ "base": "2001:db8::/104", "size": 112 }

]

}
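The subnet figures quoted for the IPv6 pool above can be checked with Python's standard ipaddress module. This is only a worked check of the arithmetic, not something Docker itself runs:

```python
import ipaddress

# The IPv6 pool from the example configuration: base /104, subnet size /112.
pool = ipaddress.ip_network("2001:db8::/104")
subnets = list(pool.subnets(new_prefix=112))

subnet_count = len(subnets)                      # 2 ** (112 - 104)
addresses_per_subnet = subnets[0].num_addresses  # 2 ** (128 - 112)

print("subnets in pool:     ", subnet_count)
print("addresses per subnet:", addresses_per_subnet)
print("first subnet:        ", subnets[0])
```

The same two lines of arithmetic apply to any pool: the number of subnets is 2 raised to the difference between the subnet size and the base prefix length, and the addresses per subnet are 2 raised to 128 minus the subnet size.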

How to Deploy a Registry Server?

A registry is an instance of the registry image, and runs within Docker.

Use a command like the following to start the registry container:

$ docker run -d -p 5000:5000 --restart=always --name registry registry:2

The registry is now ready to use.

Copy an image from Docker Hub to your registry

You can pull an image from Docker Hub and push it to your registry. The following example pulls the ubuntu:16.04 image from Docker Hub and re-tags it as my-ubuntu, then pushes it to the local registry. Finally, the ubuntu:16.04 and my-ubuntu images are deleted locally and the my-ubuntu image is pulled from the local registry.

Pull the ubuntu:16.04 image from Docker Hub.

$ docker pull ubuntu:16.04

Tag the image as localhost:5000/my-ubuntu. This creates an additional tag for the existing image. When the first part of the tag is a hostname and port, Docker interprets this as the location of a registry when pushing.

$ docker tag ubuntu:16.04 localhost:5000/my-ubuntu

Push the image to the local registry running at localhost:5000:

$ docker push localhost:5000/my-ubuntu

Remove the locally-cached ubuntu:16.04 and localhost:5000/my-ubuntu images, so that you can test pulling the image from your registry. This does not remove the localhost:5000/my-ubuntu image from your registry.

$ docker image remove ubuntu:16.04

$ docker image remove localhost:5000/my-ubuntu

Pull the localhost:5000/my-ubuntu image from your local registry.

$ docker pull localhost:5000/my-ubuntu
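The rule that a leading hostname and port selects the registry can be approximated in a few lines of Python. This is a simplified sketch of the behavior described above, not Docker's actual parser: it assumes the part before the first slash counts as a registry when it contains a dot or a colon or is exactly localhost, and otherwise falls back to a default registry. The function name is hypothetical.

```python
def split_registry(reference, default_registry="docker.io"):
    """Split an image reference into (registry, remainder).

    Simplified rule: the part before the first '/' is treated as a registry
    when it contains '.' or ':' or equals 'localhost'.
    """
    first, sep, rest = reference.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest
    return default_registry, reference


for ref in ("localhost:5000/my-ubuntu", "ubuntu:16.04", "library/ubuntu"):
    print(ref, "->", split_registry(ref))
```

Under this rule the re-tagged image is pushed to localhost:5000, while the untagged ubuntu:16.04 reference still resolves to the default registry.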

Stop a local registry

To stop the registry, use the same docker container stop command as with any other container.

$ docker container stop registry

To remove the container, use docker container rm.

$ docker container stop registry && docker container rm -v registry

Basic configuration

To configure the container, you can pass additional or modified options to the docker run command.

The following sections provide basic guidelines for configuring your registry. For more details, see the registry configuration reference.

Start the registry automatically

If you want to use the registry as part of your permanent infrastructure, you should set it to restart automatically when Docker restarts or if it exits. This example uses the --restart always flag to set a restart policy for the registry.

$ docker run -d \

-p 5000:5000 \

--restart=always \

--name registry \

registry:2

Customize the published port

If you are already using port 5000, or you want to run multiple local registries to separate areas of concern, you can customize the registry's port settings. This example runs the registry on port 5001 and also names it registry-test. Remember, the first part of the -p value is the host port and the second part is the port within the container. Within the container, the registry listens on port 5000 by default.

$ docker run -d \
  -p 5001:5000 \
  --name registry-test \
  registry:2

If you want to change the port the registry listens on within the container, you can use the environment variable REGISTRY_HTTP_ADDR to change it. This command causes the registry to listen on port 5001 within the container:

$ docker run -d \
  -e REGISTRY_HTTP_ADDR=0.0.0.0:5001 \
  -p 5001:5001 \
  --name registry-test \
  registry:2
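
The two port settings have to agree: the container side of -p must match the port the registry listens on. A minimal sketch of that relationship follows. registry_run_cmd is a hypothetical helper that only prints the command so it can be reviewed before running; the default of 5000 comes from the text above.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) a `docker run` command for a registry.
# Arguments: host port, container port (default 5000), container name.
# REGISTRY_HTTP_ADDR is only added when the container port differs from the
# registry's default of 5000, so the -p mapping and listen address stay in sync.
registry_run_cmd() {
  host_port=$1
  container_port=${2:-5000}
  name=${3:-registry}
  printf 'docker run -d'
  if [ "$container_port" != 5000 ]; then
    printf ' -e REGISTRY_HTTP_ADDR=0.0.0.0:%s' "$container_port"
  fi
  printf ' -p %s:%s --name %s registry:2\n' "$host_port" "$container_port" "$name"
}

registry_run_cmd 5001 5000 registry-test   # host 5001 mapped to default 5000
registry_run_cmd 5001 5001 registry-test   # registry itself listens on 5001
```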

Storage customization

Customize the storage location

By default, your registry data is persisted as a docker volume on the host filesystem. If you want to store your registry contents at a specific location on your host filesystem, such as if you have an SSD or SAN mounted into a particular directory, you might decide to use a bind mount instead. A bind mount is more dependent on the filesystem layout of the Docker host, but more performant in many situations. The following example binds the host directory /mnt/registry into the registry container at /var/lib/registry/.

$ docker run -d \
  -p 5000:5000 \
  --restart=always \
  --name registry \
  -v /mnt/registry:/var/lib/registry \
  registry:2

DOCKER SWARM

Create a swarm

Make sure the Docker Engine daemon is started on the host machines.

Open a terminal and ssh into the machine where you want to run your manager node. This tutorial uses a machine named manager1.

Run the following command to create a new swarm:

$ docker swarm init --advertise-addr <MANAGER-IP>

In the tutorial, the following command creates a swarm on the manager1 machine:

$ docker swarm init --advertise-addr 192.168.99.100
Swarm initialized: current node (dxn1zf6l61qsb1josjja83ngz) is now a manager.
To add a worker to this swarm, run the following command:
    docker swarm join \
    --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c \
    192.168.99.100:2377
To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

The --advertise-addr flag configures the manager node to publish its address as 192.168.99.100. The other nodes in the swarm must be able to access the manager at the IP address.

The output includes the commands to join new nodes to the swarm. Nodes will join as managers or workers depending on the value for the --token flag.

Run docker info to view the current state of the swarm:

$ docker info
Containers: 2
Running: 0
Paused: 0
Stopped: 2
...snip...
Swarm: active
NodeID: dxn1zf6l61qsb1josjja83ngz
Is Manager: true
Managers: 1
Nodes: 1
...snip...

Run the docker node ls command to view information about nodes:

$ docker node ls
ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
dxn1zf6l61qsb1josjja83ngz *  manager1  Ready   Active        Leader

The * next to the node ID indicates that you're currently connected on this node.

Docker Engine swarm mode automatically names the node with the machine host name. The tutorial covers other columns in later steps.
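
Since the init output carries the join command, it can be handy to pull the token out of saved output and rebuild the command for another node. The following is a rough sketch under stated assumptions: extract_join_token and swarm_join_cmd are hypothetical helper names, the token is assumed to start with SWMTKN- as in the sample output, and 2377 is used as the default port because that is what the sample shows.

```shell
#!/bin/sh
# Hypothetical helpers; the token format (SWMTKN-...) and port 2377 are taken
# from the sample `docker swarm init` output in this tutorial.

# Read saved `docker swarm init` output on stdin and print the first join token.
extract_join_token() {
  sed -n 's/.*--token \(SWMTKN-[^ ]*\).*/\1/p' | head -n 1
}

# Print (not run) the join command for a token, manager address, and port.
swarm_join_cmd() {
  echo "docker swarm join --token $1 $2:${3:-2377}"
}

token=$(extract_join_token <<'EOF'
Swarm initialized: current node (dxn1zf6l61qsb1josjja83ngz) is now a manager.
To add a worker to this swarm, run the following command:
    docker swarm join \
    --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c \
    192.168.99.100:2377
EOF
)
swarm_join_cmd "$token" 192.168.99.100
```

Whether the node joins as a worker or a manager depends only on which token is passed, so the same helper works for both.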

Add nodes to the swarm

Once you've created a swarm with a manager node, you're ready to add worker nodes.

Open a terminal and ssh into the machine where you want to run a worker node. This tutorial uses the name worker1.

Run the command produced by the docker swarm init output from the Create a swarm tutorial step to create a worker node joined to the existing swarm:

$ docker swarm join \
  --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c \
  192.168.99.100:2377
This node joined a swarm as a worker.

If you don't have the command available, you can run the following command on a manager node to retrieve the join command for a worker:

$ docker swarm join-token worker
To add a worker to this swarm, run the following command:
    docker swarm join \
    --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c \
    192.168.99.100:2377

Open a terminal and ssh into the machine where you want to run a second worker node. This tutorial uses the name worker2.

Run the command produced by the docker swarm init output from the Create a swarm tutorial step to create a second worker node joined to the existing swarm:

$ docker swarm join \
  --token SWMTKN-1-49nj1cmql0jkz5s954yi3oex3nedyz0fb0xx14ie39trti4wxv-8vxv8rssmk743ojnwacrr2e7c \
  192.168.99.100:2377
This node joined a swarm as a worker.

Open a terminal and ssh into the machine where the manager node runs and run the docker node ls command to see the worker nodes:

$ docker node ls
ID                           HOSTNAME  STATUS  AVAILABILITY  MANAGER STATUS
03g1y59jwfg7cf99w4lt0f662    worker2   Ready   Active
9j68exjopxe7wfl6yuxml7a7j    worker1   Ready   Active
dxn1zf6l61qsb1josjja83ngz *  manager1  Ready   Active        Leader

The MANAGER STATUS column identifies the manager nodes in the swarm. The empty status in this column for worker1 and worker2 identifies them as worker nodes.

Swarm management commands like docker node ls only work on manager nodes.

Deploy a service to the swarm

After you create a swarm, you can deploy a service to the swarm. For this tutorial, you also added worker nodes, but that is not a requirement to deploy a service.

Open a terminal and ssh into the machine where you run your manager node. For example, the tutorial uses a machine named manager1.

Run the following command:

$ docker service create --replicas 1 --name helloworld alpine ping docker.com
9uk4639qpg7npwf3fn2aasksr

The docker service create command creates the service.

The --name flag names the service helloworld.

The --replicas flag specifies the desired state of 1 running instance.

The arguments alpine ping docker.com define the service as an Alpine Linux container that executes the command ping docker.com.

Run docker service ls to see the list of running services:

$ docker service ls
ID            NAME        SCALE  IMAGE   COMMAND
9uk4639qpg7n  helloworld  1/1    alpine  ping docker.com

Inspect a service on the swarm

When you have deployed a service to your swarm, you can use the Docker CLI to see details about the service running in the swarm.

If you haven't already, open a terminal and ssh into the machine where you run your manager node. For example, the tutorial uses a machine named manager1.

Run docker service inspect --pretty <SERVICE-ID> to display the details about a service in an easily readable format.

To see the details on the helloworld service:

[manager1]$ docker service inspect --pretty helloworld
ID:             9uk4639qpg7npwf3fn2aasksr
Name:           helloworld
Service Mode:   REPLICATED
 Replicas:      1
Placement:
UpdateConfig:
 Parallelism:   1
ContainerSpec:
 Image:         alpine
 Args:          ping docker.com
Resources:
Endpoint Mode:  vip

Tip: To return the service details in json format, run the same command without the --pretty flag.

[manager1]$ docker service inspect helloworld
[
{
  "ID": "9uk4639qpg7npwf3fn2aasksr",
  "Version": {
    "Index": 418
  },
  "CreatedAt": "2016-06-16T21:57:11.622222327Z",
  "UpdatedAt": "2016-06-16T21:57:11.622222327Z",
  "Spec": {
    "Name": "helloworld",
    "TaskTemplate": {
      "ContainerSpec": {
        "Image": "alpine",
        "Args": [
          "ping",
          "docker.com"
        ]
      },
      "Resources": {
        "Limits": {},
        "Reservations": {}
      },
      "RestartPolicy": {
        "Condition": "any",
        "MaxAttempts": 0
      },
      "Placement": {}
    },
    "Mode": {
      "Replicated": {
        "Replicas": 1
      }
    },
    "UpdateConfig": {
      "Parallelism": 1
    },
    "EndpointSpec": {
      "Mode": "vip"
    }
  },
  "Endpoint": {
    "Spec": {}
  }
}
]

Run docker service ps <SERVICE-ID> to see which nodes are running the service:

[manager1]$ docker service ps helloworld
NAME                                    IMAGE   NODE     DESIRED STATE  CURRENT STATE      ERROR  PORTS
helloworld.1.8p1vev3fq5zm0mi8g0as41w35  alpine  worker2  Running        Running 3 minutes

In this case, the one instance of the helloworld service is running on the worker2 node. You may see the service running on your manager node. By default, manager nodes in a swarm can execute tasks just like worker nodes.

Swarm also shows you the DESIRED STATE and CURRENT STATE of the service task so you can see if tasks are running according to the service definition.

Run docker ps on the node where the task is running to see details about the container for the task.

Tip: If helloworld is running on a node other than your manager node, you must ssh to that node.

[worker2]$ docker ps
CONTAINER ID  IMAGE          COMMAND            CREATED        STATUS        PORTS  NAMES
e609dde94e47  alpine:latest  "ping docker.com"  3 minutes ago  Up 3 minutes         helloworld.1.8p1vev3fq5zm0mi8g0as41w35
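
Comparing DESIRED STATE with CURRENT STATE by eye gets tedious once a service has many tasks. The sketch below shows one way the comparison could be scripted. It makes two assumptions: tasks_converged is a hypothetical helper, and its input is one line per task with the desired state as the first word and the current state as the second, which is roughly what docker service ps --format '{{.DesiredState}} {{.CurrentState}}' is expected to print.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if every task's current state matches its
# desired state. Input (stdin): one line per task, desired state first, e.g.
#   Running Running 3 minutes ago
tasks_converged() {
  awk '$1 != $2 { mismatch = 1 } END { exit mismatch }'
}

# Sample line modeled on the helloworld task shown above.
if printf '%s\n' "Running Running 3 minutes ago" | tasks_converged; then
  echo "all tasks match their desired state"
else
  echo "some tasks have not reached their desired state"
fi
```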

Scale the service in the swarm

Once you have deployed a service to a swarm, you are ready to use the Docker CLI to scale the number of containers in the service. Containers running in a service are called "tasks."

If you haven't already, open a terminal and ssh into the machine where you run your manager node. For example, the tutorial uses a machine named manager1.

Run the following command to change the desired state of the service running in the swarm:

$ docker service scale <SERVICE-ID>=<NUMBER-OF-TASKS>

For example:

$ docker service scale helloworld=5
helloworld scaled to 5

Run docker service ps <SERVICE-ID> to see the updated task list:

$ docker service ps helloworld
NAME                                    IMAGE   NODE      DESIRED STATE  CURRENT STATE
helloworld.1.8p1vev3fq5zm0mi8g0as41w35  alpine  worker2   Running        Running 7 minutes
helloworld.2.c7a7tcdq5s0uk3qr88mf8xco6  alpine  worker1   Running        Running 24 seconds
helloworld.3.6crl09vdcalvtfehfh69ogfb1  alpine  worker1   Running        Running 24 seconds
helloworld.4.auky6trawmdlcne8ad8phb0f1  alpine  manager1  Running        Running 24 seconds
helloworld.5.ba19kca06l18zujfwxyc5lkyn  alpine  worker2   Running        Running 24 seconds

You can see that swarm has created 4 new tasks to scale to a total of 5 running instances of Alpine Linux. The tasks are distributed between the three nodes of the swarm. One is running on manager1.

Run docker ps to see the containers running on the node where you're connected. The following example shows the tasks running on manager1:

$ docker ps
CONTAINER ID  IMAGE          COMMAND            CREATED             STATUS             PORTS  NAMES
528d68040f95  alpine:latest  "ping docker.com"  About a minute ago  Up About a minute         helloworld.4.auky6trawmdlcne8ad8phb0f1

If you want to see the containers running on other nodes, ssh into those nodes and run the docker ps command.
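
To see how the scaled tasks are spread across nodes without logging in to each one, the task list can be summarized per node. This is a small sketch that assumes the default docker service ps column layout shown in this section (NAME, IMAGE, NODE, ...); tasks_per_node is a hypothetical helper, and the sample input is copied from the task list above.

```shell
#!/bin/sh
# Hypothetical helper: count tasks per node from `docker service ps` style
# output on stdin. Assumes a header line and NODE as the third column; rows
# for older task versions (prefixed with "\_") would shift the columns and are
# not handled here.
tasks_per_node() {
  awk 'NR > 1 { count[$3]++ } END { for (n in count) print n, count[n] }' | sort
}

tasks_per_node <<'EOF'
NAME IMAGE NODE DESIRED STATE CURRENT STATE
helloworld.1.8p1vev3fq5zm0mi8g0as41w35 alpine worker2 Running Running 7 minutes
helloworld.2.c7a7tcdq5s0uk3qr88mf8xco6 alpine worker1 Running Running 24 seconds
helloworld.3.6crl09vdcalvtfehfh69ogfb1 alpine worker1 Running Running 24 seconds
helloworld.4.auky6trawmdlcne8ad8phb0f1 alpine manager1 Running Running 24 seconds
helloworld.5.ba19kca06l18zujfwxyc5lkyn alpine worker2 Running Running 24 seconds
EOF
```

On a live manager node the same function could be fed directly from docker service ps helloworld.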

Delete the service running on the swarm

The remaining steps in the tutorial don't use the helloworld service, so now you can delete the service from the swarm.

If you haven't already, open a terminal and ssh into the machine where you run your manager node. For example, the tutorial uses a machine named manager1.

Run docker service rm helloworld to remove the helloworld service.

$ docker service rm helloworld
helloworld

Run docker service inspect <SERVICE-ID> to verify that the swarm manager removed the service. The CLI returns a message that the service is not found:

$ docker service inspect helloworld
[]
Status: Error: no such service: helloworld, Code: 1

Even though the service no longer exists, the task containers take a few seconds to clean up. You can use docker ps on the nodes to verify when the tasks have been removed.

Apply rolling updates to a service

In a previous step of the tutorial, you scaled the number of instances of a service. In this part of the tutorial, you deploy a service based on the Redis 3.0.6 container tag. Then you upgrade the service to use the Redis 3.0.7 container image using rolling updates.

If you haven't already, open a terminal and ssh into the machine where you run your manager node. For example, the tutorial uses a machine named manager1.

Deploy your Redis tag to the swarm and configure the service with a 10-second update delay. Note that the following example shows an older Redis tag:

$ docker service create \
  --replicas 3 \
  --name redis \
  --update-delay 10s \
  redis:3.0.6
0u6a4s31ybk7yw2wyvtikmu50

You configure the rolling update policy at service deployment time.

The --update-delay flag configures the time delay between updates to a service task or sets of tasks. You can describe the time T as a combination of the number of seconds Ts, minutes Tm, or hours Th. So 10m30s indicates a 10-minute 30-second delay.

By default, the scheduler updates 1 task at a time. You can pass the --update-parallelism flag to configure the maximum number of service tasks that the scheduler updates simultaneously.

By default, when an update to an individual task returns a state of RUNNING, the scheduler schedules another task to update until all tasks are updated. If at any time during an update a task returns FAILED, the scheduler pauses the update. You can control the behavior using the --update-failure-action flag for docker service create or docker service update.

Inspect the redis service:

$ docker service inspect --pretty redis
ID:             0u6a4s31ybk7yw2wyvtikmu50
Name:           redis
Service Mode:   Replicated
 Replicas:      3
Placement:
 Strategy:      Spread
UpdateConfig:
 Parallelism:   1
 Delay:         10s
ContainerSpec:
 Image:         redis:3.0.6
Resources:
Endpoint Mode:  vip

Now you can update the container image for redis. The swarm manager applies the update to nodes according to the UpdateConfig policy:

$ docker service update --image redis:3.0.7 redis
redis

The scheduler applies rolling updates as follows by default:

Stop the first task.

Schedule an update for the stopped task.

Start the container for the updated task.

If the update to a task returns RUNNING, wait for the specified delay period then start the next task.

If, at any time during the update, a task returns FAILED, pause the update.
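
The steps above can be sketched as a small simulation. This is not how the swarm scheduler is implemented; rolling_update is a hypothetical function that takes the state each task reports after its update, and UPDATE_DELAY is an assumed environment variable standing in for --update-delay (defaulting to 0 seconds so the example finishes immediately).

```shell
#!/bin/sh
# Simulated rolling update with a parallelism of 1. Each argument is the state
# a task reports after being updated (RUNNING or FAILED).
rolling_update() {
  delay=${UPDATE_DELAY:-0}
  n=0
  for state in "$@"; do
    n=$((n + 1))
    echo "task $n: stop, update, start -> $state"
    if [ "$state" = "FAILED" ]; then
      # A failed task pauses the whole update; later tasks are left untouched.
      echo "update paused at task $n"
      return 1
    fi
    # Wait for the delay period before moving on to the next task.
    if [ "$n" -lt "$#" ]; then
      sleep "$delay"
    fi
  done
  echo "update complete"
}

rolling_update RUNNING RUNNING RUNNING
rolling_update RUNNING FAILED RUNNING || true
```

In the second call the third task is never touched, which mirrors the paused state the scheduler reports when a task fails.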

Run docker service inspect --pretty redis to see the new image in the desired state:

$ docker service inspect --pretty redis
ID:             0u6a4s31ybk7yw2wyvtikmu50
Name:           redis
Service Mode:   Replicated
 Replicas:      3
Placement:
 Strategy:      Spread
UpdateConfig:
 Parallelism:   1
 Delay:         10s
ContainerSpec:
 Image:         redis:3.0.7
Resources:
Endpoint Mode:  vip

The output of service inspect shows if your update paused due to failure:

$ docker service inspect --pretty redis
ID:             0u6a4s31ybk7yw2wyvtikmu50
Name:           redis
...snip...
Update status:
 State:      paused
 Started:    11 seconds ago
 Message:    update paused due to failure or early termination of task 9p7ith557h8ndf0ui9s0q951b
...snip...

To restart a paused update run docker service update <SERVICE-ID>. For example:

$ docker service update redis

To avoid repeating certain update failures, you may need to reconfigure the service by passing flags to docker service update.

Run docker service ps <SERVICE-ID> to watch the rolling update:

$ docker service ps redis
NAME                                   IMAGE        NODE      DESIRED STATE  CURRENT STATE            ERROR
redis.1.dos1zffgeofhagnve8w864fco      redis:3.0.7  worker1   Running        Running 37 seconds
 \_ redis.1.88rdo6pa52ki8oqx6dogf04fh  redis:3.0.6  worker2   Shutdown       Shutdown 56 seconds ago
redis.2.9l3i4j85517skba5o7tn5m8g0      redis:3.0.7  worker2   Running        Running About a minute
 \_ redis.2.66k185wilg8ele7ntu8f6nj6i  redis:3.0.6  worker1   Shutdown       Shutdown 2 minutes ago
redis.3.egiuiqpzrdbxks3wxgn8qib1g      redis:3.0.7  worker1   Running        Running 48 seconds
 \_ redis.3.ctzktfddb2tepkr45qcmqln04  redis:3.0.6  manager1  Shutdown       Shutdown 2 minutes ago

Before Swarm updates all of the tasks, you can see that some are running redis:3.0.6 while others are running redis:3.0.7. The output above shows the state once the rolling updates are done.

What is Docker Volume?

Volumes

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers. While bind mounts are dependent on the directory structure and OS of the host machine, volumes are completely managed by Docker. Volumes have several advantages over bind mounts:

Volumes are easier to back up or migrate than bind mounts.

You can manage volumes using Docker CLI commands or the Docker API.

Volumes work on both Linux and Windows containers.

Volumes can be more safely shared among multiple containers.

Volume drivers let you store volumes on remote hosts or cloud providers, to encrypt the contents of volumes, or to add other functionality.

New volumes can have their content pre-populated by a container.

Volumes on Docker Desktop have much higher performance than bind mounts from Mac and Windows hosts.

In addition, volumes are often a better choice than persisting data in a container's writable layer, because a volume doesn't increase the size of the containers using it, and the volume's contents exist outside the lifecycle of a given container.

If your container generates non-persistent state data, consider using a tmpfs mount to avoid storing the data anywhere permanently, and to increase the container's performance by avoiding writing into the container's writable layer.

Volumes use rprivate bind propagation, and bind propagation isn't configurable for volumes.

Choose the -v or --mount flag

In general, --mount is more explicit and verbose. The biggest difference is that the -v syntax combines all the options together in one field, while the --mount syntax separates them. Here is a comparison of the syntax for each flag.

If you need to specify volume driver options, you must use --mount.

-v or --volume: Consists of three fields, separated by colon characters (:). The fields must be in the correct order, and the meaning of each field isn't immediately obvious.

In the case of named volumes, the first field is the name of the volume, and is unique on a given host machine. For anonymous volumes, the first field is omitted.

The second field is the path where the file or directory is mounted in the container.

The third field is optional, and is a comma-separated list of options, such as ro. These options are discussed below.

--mount: Consists of multiple key-value pairs, separated by commas and each consisting of a <key>=<value> tuple. The --mount syntax is more verbose than -v or --volume, but the order of the keys isn't significant, and the value of the flag is easier to understand.

The type of the mount, which can be bind, volume, or tmpfs. This topic discusses volumes, so the type is always volume.

The source of the mount. For named volumes, this is the name of the volume. For anonymous volumes, this field is omitted. Can be specified as source or src.

The destination takes as its value the path where the file or directory is mounted in the container. Can be specified as destination, dst, or target.

The readonly option, if present, causes the volume to be mounted into the container as read-only. Can be specified as readonly or ro.

The volume-opt option, which can be specified more than once, takes a key-value pair consisting of the option name and its value.

$ docker service create \
  --mount 'type=volume,src=<VOLUME-NAME>,dst=<CONTAINER-PATH>,volume-driver=local,volume-opt=type=nfs,volume-opt=device=<nfs-server>:<nfs-path>,"volume-opt=o=addr=<nfs-address>,vers=4,soft,timeo=180,bg,tcp,rw"' \
  --name myservice \
  <IMAGE>

The examples below show both the --mount and -v syntax where possible, with --mount first.

Differences between -v and --mount behavior

As opposed to bind mounts, all options for volumes are available for both --mount and -v flags.

Volumes used with services only support --mount.
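
To make the mapping between the two syntaxes concrete, here is a minimal sketch that rewrites a -v value as the equivalent --mount value. v_to_mount is a hypothetical helper, not part of Docker. It assumes the value refers to a named or anonymous volume (not a host path) and only understands the ro option; any other option in the third field is ignored.

```shell
#!/bin/sh
# Hypothetical helper: convert a -v/--volume value for a volume into the
# matching --mount value. Handles "name:/path[:opts]" and the anonymous
# form "/path". Only the "ro" option is translated (to "readonly").
v_to_mount() {
  spec=$1
  case $spec in
    *:*:*) src=${spec%%:*}; rest=${spec#*:}; dst=${rest%%:*}; opts=${rest#*:} ;;
    *:*)   src=${spec%%:*}; dst=${spec#*:}; opts="" ;;
    *)     src=""; dst=$spec; opts="" ;;
  esac
  out="type=volume"
  if [ -n "$src" ]; then
    out="$out,src=$src"
  fi
  out="$out,dst=$dst"
  case ",$opts," in
    *,ro,*) out="$out,readonly" ;;
  esac
  echo "$out"
}

v_to_mount myvol:/app:ro   # named volume, read-only
v_to_mount myvol:/app      # named volume, read-write
v_to_mount /app            # anonymous volume
```

The field order matters for -v, whereas the keys printed for --mount could appear in any order.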